Build a Google Assistant Bot for a Firebase Application Using Actions on Google, with Firebase Cloud Functions as the Backend

After attending Google I/O 2017, where Google launched actions.google.com for building custom Google Assistant bots, I was excited to try something with Google Assistant. I also wanted to do something with Firebase. The two seemed like a perfect mix, and I ended up building a Google Assistant bot for our Firebase project. This article explains how we built it, using our community, GDG Chennai, as the use case.

I’ll go through all the steps right from creating a Firebase project to connecting it with our Google Assistant bot built with Actions.

Create a Firebase project.

Let’s start by creating a Firebase project. If you already have a Firebase project up and running, you can skip this step.

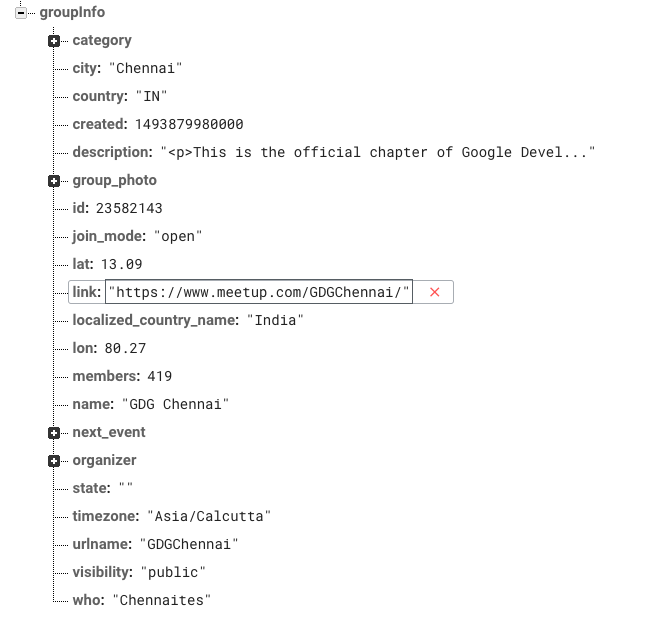

We created a project to hold all the data related to our community and our upcoming events. The data comes from our Meetup page: we imported it using the Meetup API and added it to Firebase.
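For reference, the handler code later in this article reads `next_event.time` and `next_event.venue` from a `groupInfo` node. Below is a minimal sketch of what such a node could look like, together with a small helper that reduces an imported record to that shape. The helper name `toGroupInfo`, the `name` field, the input field names, and the sample values are all assumptions for illustration; this is not our actual import script or the exact Meetup API payload.

```javascript
// Hypothetical helper: reduce an imported group record to the shape the
// webhook reads later (groupInfo.next_event.time / groupInfo.next_event.venue).
// The input field names are illustrative assumptions, not the Meetup API schema.
function toGroupInfo(group) {
  const next = group.next_event || {};
  return {
    name: group.name || '',
    next_event: {
      // Epoch milliseconds, or null when no event is scheduled.
      time: typeof next.time === 'number' ? next.time : null,
      // null when the venue is not known yet.
      venue: next.venue || null
    }
  };
}

// Made-up sample record, for illustration only.
const sample = toGroupInfo({
  name: 'Example Group',
  next_event: { time: 1500000000000 }
});
console.log(JSON.stringify(sample, null, 2));
```

Keeping the stored shape this small makes it easier to see which fields the bot actually depends on.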

Sample Data in Firebase Project.

Add Actions on Google to the Project

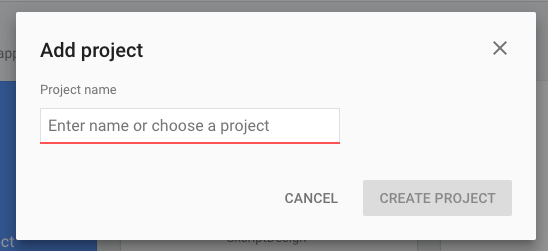

Now go to https://developers.google.com/actions/ and create a project there by importing the above Firebase app.

Project creation in Actions on Google.

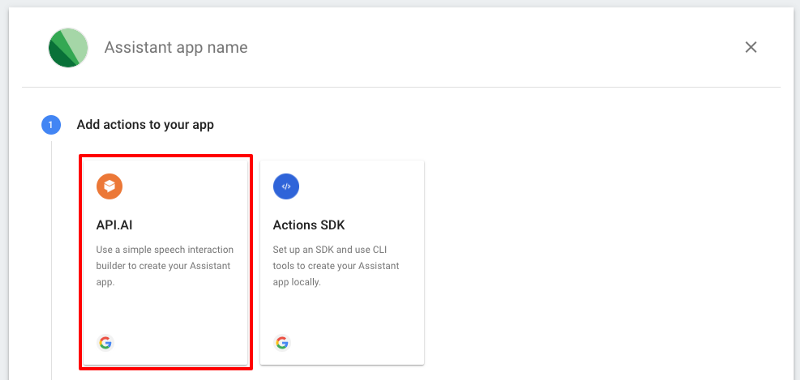

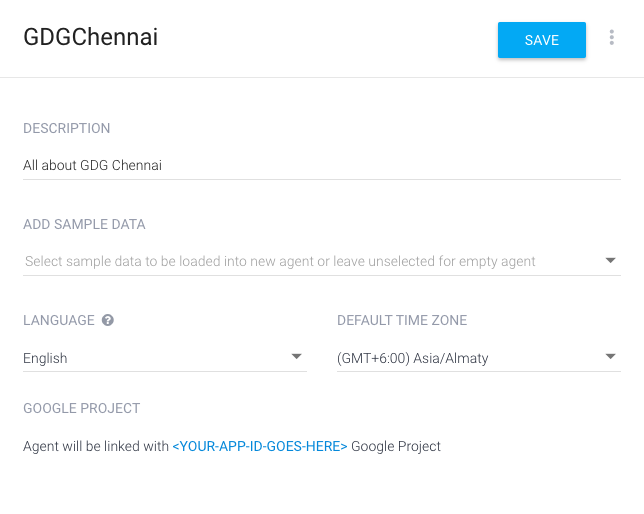

The next step is to connect our project to API.AI, which will be the brain of our application; this is where you’ll define the intents and entities. To get started, click on API.AI.

Now click on the **CREATE ACTIONS ON API.AI** button. It will take you to api.ai, where you log in and create a project. Make sure that your Google project is selected by default at the bottom.

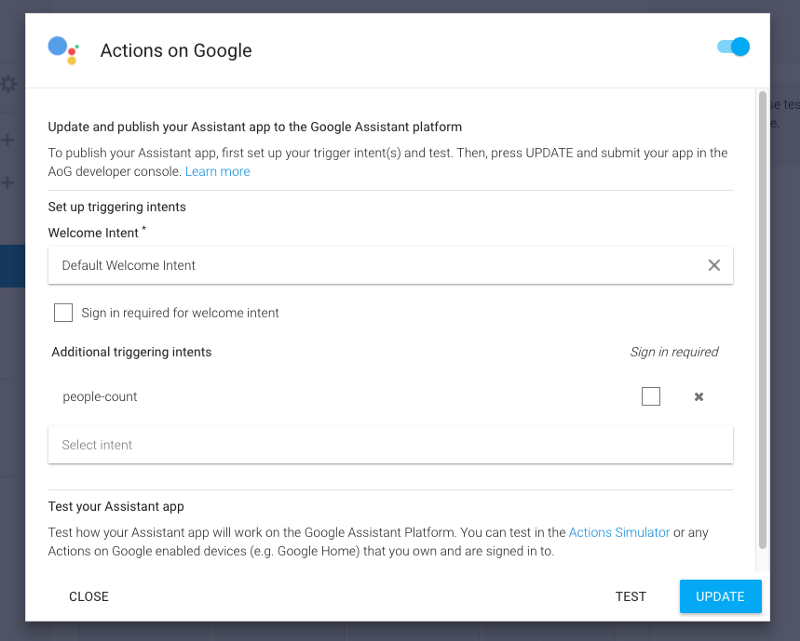

To complete the API.AI setup, go to the Integrations section, click on the *Actions on Google* block, and hit Update in the modal that appears.

Your Actions on Google project is now connected to the API.AI project. Go back to the Actions project console and fill in the remaining information about your project. Pay particular attention to the invocation section, as this defines how you’ll call your application in Google Assistant. Once everything is set, click on the test button to open the simulator, where we can test our bot.

Defining Intents in API.AI

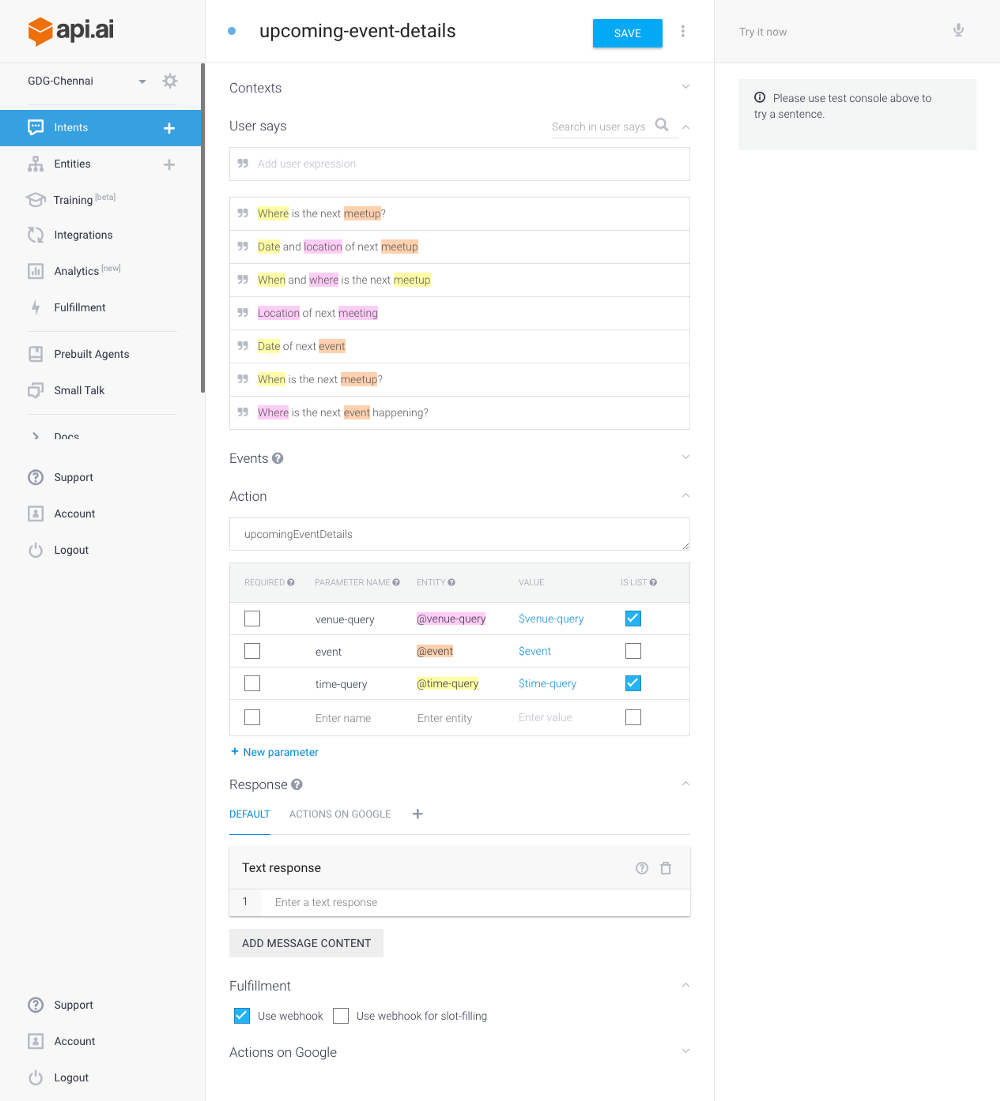

Now let’s create a simple intent that will be used to get the details of the community’s upcoming event.

Click on the plus button in the Intents section of the sidebar. I created a sample intent with a few user inputs and mapped entities in those inputs.

Here upcoming-event-details is the intent name, and we have a list of user commands that will invoke this intent. In each command, a few words are highlighted and mapped to corresponding entities. For example, the words “where”, “location”, and “venue” all represent the same thing, so we created an entity named venue-query and mapped them to it. Whenever a user says one of these words, it is classified and passed along as a parameter, which our backend logic can later use to decide what to answer.

Also specify which action should be called whenever this intent is triggered. Here I’m using upcomingEventDetails as the action name.

Make sure that the Webhook checkbox is enabled under Fulfillment.
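To make the link between intents, entities, and the backend more concrete, here is a rough sketch of how the action name and parameters could be read from a webhook request body. The sample body is hand-written and trimmed to the fields used here, following my understanding of the API.AI v1 webhook format. The helper `readIntent` is hypothetical, and the empty-string convention for unmatched parameters is an assumption. In the rest of this article the client library does this work for us.

```javascript
// Trimmed, hand-written example of a webhook request body in the API.AI v1
// format. Only the fields used below are shown; the values are made up.
const sampleBody = {
  result: {
    resolvedQuery: 'where is the next event',
    action: 'upcomingEventDetails',
    parameters: { 'venue-query': 'where', 'time-query': '' },
    metadata: { intentName: 'upcoming-event-details' }
  }
};

// Hypothetical helper: pull out the action name and the non-empty parameters.
function readIntent(body) {
  const result = (body && body.result) || {};
  const parameters = {};
  Object.keys(result.parameters || {}).forEach(function (key) {
    // Assumption: parameters that did not match arrive as empty strings.
    if (result.parameters[key] !== '') {
      parameters[key] = result.parameters[key];
    }
  });
  return { action: result.action || null, parameters: parameters };
}

console.log(readIntent(sampleBody));
```

In this sample only venue-query survives, which is the signal the backend would use to answer with the venue and not the time.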

Building the backend.

If you need the bot to perform custom operations, you need to build a backend to which API.AI sends the request after classifying the intent. Since our project and its data are on Firebase, we’ll use Firebase Cloud Functions as our backend.

We need to build a simple HTTP server that can receive the request from API.AI and return a response. You can read here about how to use Firebase Cloud Functions to build an HTTP RESTful API.

All we need is a single endpoint, so we created a function with an HTTP trigger, as described in the above article.

    const functions = require('firebase-functions');

    exports.assistant = functions.https.onRequest((request, response) => {
      // Intent handling goes here.
    });
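Because the function body is just an Express-style `(request, response)` handler, it can be tried locally before deploying. The sketch below is only an illustration: `assistantHandler` and `fakeResponse` are hypothetical names, the POST check is my own addition, and the reply is a placeholder. In deployed code the handler would be passed to `functions.https.onRequest(...)` as shown above.

```javascript
// Sketch of the HTTP function body as a plain function, so it can be
// exercised without deploying anything.
function assistantHandler(request, response) {
  // API.AI calls the webhook with POST; reject anything else early.
  if (request.method !== 'POST') {
    response.status(405).send('Method Not Allowed');
    return;
  }
  // Placeholder reply; the real intent handling is built in the next steps.
  response.status(200).send('OK');
}

// Hypothetical stand-in for the Express-style response object.
function fakeResponse() {
  return {
    statusCode: null,
    body: null,
    status: function (code) { this.statusCode = code; return this; },
    send: function (body) { this.body = body; return this; }
  };
}

const res = fakeResponse();
assistantHandler({ method: 'GET' }, res);
console.log(res.statusCode, res.body);
```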

There is a Node.js client library for Actions on Google that simplifies building the backend.

    npm install actions-on-google --save

Then reference it in your code as shown below.

    const ApiAiApp = require('actions-on-google').ApiAiApp;

Now we’ll use ApiAiApp to handle the response, read the parameters, and so on.

To manage all the intents, we create an object that maps each intent to its callback function.

    const ACTIONS = {
      'upcomingEventDetails': {callback: upcomingEventDetailsHandler}
    };

Now we can easily map the incoming intent to its corresponding handler function.

    const app = new ApiAiApp({request: request, response: response});
    const intent = app.getIntent();
    app.handleRequest(ACTIONS[intent].callback);

So when the user says any of the statements listed in the upcoming-event-details intent, app.getIntent() will return the action name upcomingEventDetails.

To return a response, we fetch the data and build the statement. This is done by the handler function, which is invoked using

    app.handleRequest(ACTIONS[intent].callback);
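One thing to watch with this lookup is that `ACTIONS[intent].callback` throws a TypeError if the action name has no entry in the object. A possible guard, sketched here with hypothetical names (`resolveHandler`, `unknownActionHandler`, and the stand-in objects are not part of our project or of the library), is to fall back to a default handler:

```javascript
// Hypothetical fallback handler used when no entry matches the action name.
function unknownActionHandler(app) {
  app.ask('Sorry, I did not get that. Could you try again?');
}

// Look up the handler for an action name, falling back instead of throwing
// when the name is not registered.
function resolveHandler(actions, intent) {
  const entry = Object.prototype.hasOwnProperty.call(actions, intent)
    ? actions[intent]
    : null;
  return entry ? entry.callback : unknownActionHandler;
}

// Stand-ins so the sketch runs on its own.
const DEMO_ACTIONS = {
  upcomingEventDetails: { callback: function (app) { app.ask('details'); } }
};
const spoken = [];
const fakeApp = { ask: function (text) { spoken.push(text); } };

resolveHandler(DEMO_ACTIONS, 'upcomingEventDetails')(fakeApp);
resolveHandler(DEMO_ACTIONS, 'somethingElse')(fakeApp);
console.log(spoken);
```

In the webhook this would be used as `app.handleRequest(resolveHandler(ACTIONS, intent));`.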

To get the data from the Firebase database, I wrote a function that can be called whenever needed.

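    // `firebase` is assumed to be an already initialized Firebase SDK instance.
    function getGroupInfo(options) {
      firebase.database()
        .ref('groupInfo')
        .once('value')
        .then(function(snapshot) {
          // Pass the stored group info to the caller.
          var info = snapshot.val();
          options.onSuccess({info: info});
        })
        .catch(function(error) {
          // Log read failures instead of leaving the rejection unhandled.
          console.error('Could not read groupInfo', error);
        });
    }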
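As an alternative to the `onSuccess` callback, the same read could return a Promise, which tends to compose better once more handlers need the data. The sketch below is a variation and not the code we shipped. Passing the database in as an argument and the in-memory `fakeDatabase` stub are assumptions made so the example runs without Firebase; the stub mimics only `ref().once('value')` and `snapshot.val()`.

```javascript
// Promise-returning variant of getGroupInfo. The database object is passed
// in (an assumption made for this sketch) so a stub can replace Firebase.
function getGroupInfo(database) {
  return database
    .ref('groupInfo')
    .once('value')
    .then(function (snapshot) {
      return { info: snapshot.val() };
    });
}

// Hypothetical in-memory stub exposing only ref().once('value') and val().
function fakeDatabase(data) {
  return {
    ref: function (path) {
      return {
        once: function () {
          return Promise.resolve({
            // Like a real snapshot, return null when nothing is stored.
            val: function () {
              return Object.prototype.hasOwnProperty.call(data, path)
                ? data[path]
                : null;
            }
          });
        }
      };
    }
  };
}

const pending = getGroupInfo(fakeDatabase({ groupInfo: { name: 'Example Group' } }));
pending.then(function (data) {
  console.log(data.info.name);
});
```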

And the handler function for the upcoming event details action is:

    const moment = require('moment'); // used below to format the event date

    function upcomingEventDetailsHandler(app) {
      console.log('Came to Upcoming Event Details');

      // Parameters that API.AI extracted from the user's question.
      const fromFallback = app.getArgument('fromFallback');
      const timeQuery = app.getArgument('time-query');
      const venueQuery = app.getArgument('venue-query');

      var message,
        statement,
        statements = [];

      getGroupInfo({
        onSuccess: function(data) {
          console.log(timeQuery);
          console.log(venueQuery);

          // Answer only the parts the user asked about.
          if (timeQuery) {
            statement = "It is happening on " + moment(data.info.next_event.time).format("MMM Do YY") + ".";
            statements.push(statement);
          }

          if (venueQuery) {
            var venue = data.info.next_event.venue || "yet to be announced.";
            statement = "The venue is " + venue;
            statements.push(statement);
          }

          // Join the statements into a single reply and send it to the user.
          message = statements.join(" ");
          console.log(message);
          app.ask(message);
        }
      });
    }

Here the parameters time-query and venue-query are fetched to check whether the user is asking about the venue, the time, or both. Based on that, we build the statements and return them using the following call:

    app.ask(message);

app.ask() is very useful and important, as it automatically builds the JSON format that API.AI and Actions on Google understand, covering both text and speech.
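The statement-building step in the handler above can also be pulled out into a pure function, which makes it easy to check without Firebase or the Assistant simulator. This is a sketch under assumptions and not the code we run: `buildMessage` is a hypothetical name, the date formatter is injected so the example does not depend on moment, and the fallback sentence for the case where neither parameter is present is my own addition (the original handler would pass an empty string to `app.ask()` in that case).

```javascript
// Pure version of the statement-building step. formatDate is injected
// (an assumption for this sketch) so the example does not depend on moment.
function buildMessage(info, query, formatDate) {
  const event = info.next_event || {};
  const statements = [];
  if (query.time) {
    statements.push('It is happening on ' + formatDate(event.time) + '.');
  }
  if (query.venue) {
    statements.push('The venue is ' + (event.venue || 'yet to be announced') + '.');
  }
  // Hypothetical fallback so the bot never sends an empty reply.
  if (statements.length === 0) {
    statements.push('What would you like to know about the upcoming event?');
  }
  return statements.join(' ');
}

// Stub formatter standing in for moment(time).format('MMM Do YY').
const message = buildMessage(
  { next_event: { time: 0, venue: null } },
  { time: true, venue: true },
  function () { return 'Jan 1st 70'; }
);
console.log(message);
```

The handler would then reduce to reading the parameters, calling `buildMessage`, and passing the result to `app.ask()`.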

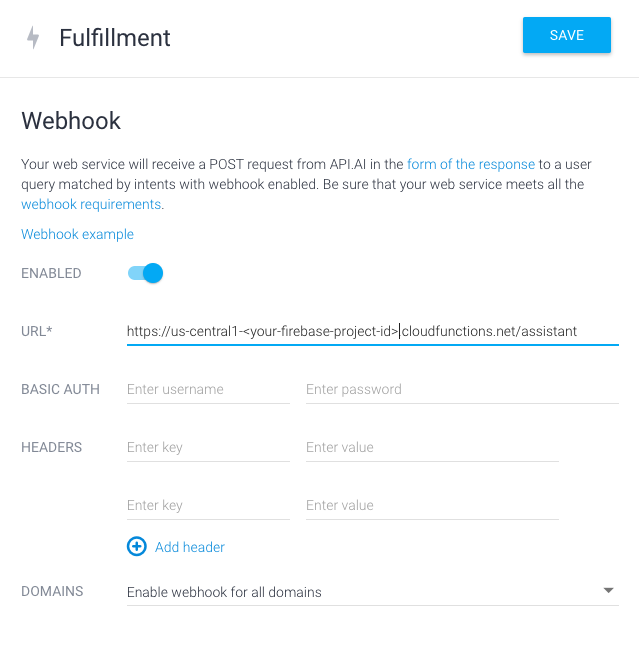

Connecting the webhook.

Now that the backend webhook is ready, we connect it to API.AI so that it can forward requests to our webhook. Go to **Fulfillment**, enter the URL, and enable the webhook for all domains.

With this, the entire setup for the basic query is done. You can test it with the simulator after updating the project in API.AI.

Demo Time

The best part is that we’re completely serverless: we use Cloud Functions directly, which makes this much easier and faster to build than running a server of our own.

The Future Plan

I plan to build more commands into it and to build one such bot for the entire GDG community, where you can ask more questions. Believe me, it’s fun :D

If you’re looking to build such a bot, feel free to contact us, we’d love to work with you :)
